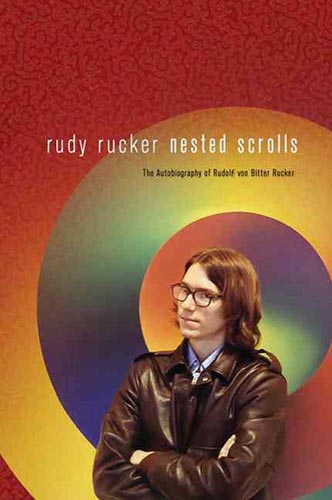

I'm guest-blogging on Charlie's Diary for a week or two, putting up about six posts. I'm doing this for fun, and to drum up interest in my autobiography, Nested Scrolls, which is just out from Tor Books in the US, and has been out from PS Publishing in the UK since June.

I'll put up my first post today, and then come back with my second post on December 27. And I probably won't be delving into the comment threads until December 28.

Preliminary Pleasantries

I'm happy to be on Charlie's blog as he's one of my favorite writers. For me, Accelerando was a huge breakthrough. Before Accelerando, SF writers were kind of worried about how to write about the aftermath of the Singularity. And then Charlie showed us how. Pile on the miracles and keep a straight face. And Accelerando was literate and funny. Once I'd read it, I was ready to write my own postsingular novel--in fact I called it Postsingular.

Something I especially liked in Accelerando was Charlie's way of saving fuel on interstellar flights. Send the people in the form of simulations running inside a computer/spaceship the size of a Coke can. And the people on his tiny ship are well aware of their nature--they jokingly refer to themselves as "pigs in cyberspace." Lovely.

Origins of the Digital Immortality Trope

Stepping back from the postsingular future, I'm going to spend my first couple of posts talking about digital immortality, both as an SF trope, and as a near-future real-world tech product. And then I'll do some posts about writing science fiction, and about some of my recent ideas.

My early cyberpunk SF novel Software of 1982 is, I like to argue, one of the first books in which we see humans uploading their personalities as software for android bodies. Feel free to comment if you think I'm wrong about this. I'm ready for you. But, as I mentioned, I won't be on the comments until about December 28.

For now, let me say a little more about the mind-uploading trope, and suggest a way of faking digital immortality in the near future...

As I recall, thirty years ago, uploading your mind to a computer was not at all an idea that was "in the air." Before I was able to write Software, I needed to work out a whole theory for the uploading process in my non-fiction book Infinity and the Mind, which also appeared in 1982.

I will confess that I had a certain failure of vision when I wrote Software. At that point, computers were physically large, and some of them needed to be kept very cold. So I had my main character Cobb's personality living in a large, refrigerated computer that was housed in an ice-cream truck that followed his nimble android body around!

Computer scientist Hans Moravec further popularized the notion of personality uploads in his Mind Children of 1988. And by now, the notion of a personality upload is so commonplace that people have trouble understanding that it was initially a difficult thing to imagine.

Having thought about the idea for so long, I've naturally developed some opinions about it. In practice, copying a brain would be very hard, for the brain isn't in digital form. The brain's information is stored in the geometry of its axons, dendrites and synapses, in the ongoing biochemical balances of its chemicals, and in the fleeting flow of its electrical currents.

By the way, in my novel Software, the robots who specialize in extracting people's personality software do so by eating their brains, which made for some nice lively scenes. Why is this book not a movie yet?

Another side-issue regarding personality uploads is that our personalities are not in fact localized within our brains. We live in our entire bodies--in our hearts, in our muscles, in our organs. So you'd really want to be uploading a blueprint-plus-operating system for the entire body, not just the brain. But then you're shading into space-travel via direct matter transmission, as in the movie The Fly. Which is quite a different trope.

But it's really the personality-as-computer-code idea that we want to be talking about here, so let's focus on that. Without getting into the messy biological aspects of having a human body, how might we best model our selves as assemblages of digital data and software?

The Lifebox Method for Faking Digital Immortality

My feeling is that we're on the verge of having a technology for making such digital models of human personalities. I use the word lifebox for these computer models. My idea is simply that, instead of using some unknown tech to upload a subject's personality into a computer, I'll settle for a reasonably good facsimile of the subject that lives either online or, if feasible, inside an android. The lifebox data and code are to be created with today's ordinary off-the-shelf tech, and with the participation of the subject.

The method that I envision is that a lifebox contains a hefty database with a goodly selection of a person's memories, along with a rich set of hyperlinks among the memories. And you give it a bit of a front-end so you can interact with it. This is an idea I've been talking about for a few years--see, for instance, my tome on the meaning of computation: The Lifebox, the Seashell, and the Soul.

A number of tech firms are already working on various types of lifebox products, and the concept has been discussed in an article, "Cyberspace When You're Dead," in the New York Times, as well as at the recent Personal Digital Archiving conference in San Francisco. I have some links and background on the idea in my blog post, "Digital Immortality Again." The subject is continually advancing and evolving.

Your lifebox will have a kind of browser software with a search engine capable of returning reasonable links into your database when prompted by spoken or written questions from other users. These might be friends, lovers or business partners checking you out, or perhaps grandchildren wanting to know what you were like.

Your lifebox will give other people a reasonably good impression of having a conversation with you. Their questions are combed for trigger words to access the lifebox information. A lifebox doesn't pretend to be an intelligent program; we don't expect it to reason about problems proposed to it. A lifebox is really just some compact digital memory with a little extra software.
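To make the trigger-word retrieval described above concrete, here is a minimal Python sketch. It assumes a lifebox is just a dictionary of memories joined by undirected hyperlinks, and that "trigger words" means the non-stopword words a question shares with a stored memory. The names `Lifebox`, `Memory`, and `ask`, and the tiny stopword list, are hypothetical illustrations rather than any existing product's API; the sample memories are taken from this post.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical, minimal stopword list; a real system would use a fuller one.
STOPWORDS = {"a", "about", "an", "and", "did", "do", "how", "in", "is",
             "of", "the", "to", "was", "were", "what", "you", "your"}


@dataclass
class Memory:
    key: str
    text: str
    links: set[str] = field(default_factory=set)


class Lifebox:
    def __init__(self) -> None:
        self.memories: dict[str, Memory] = {}

    def add(self, key: str, text: str) -> None:
        self.memories[key] = Memory(key, text)

    def link(self, a: str, b: str) -> None:
        # Links are undirected: the stories have no preferred sequence.
        self.memories[a].links.add(b)
        self.memories[b].links.add(a)

    @staticmethod
    def trigger_words(text: str) -> set[str]:
        words = re.findall(r"[a-z']+", text.lower())
        return {w for w in words if w not in STOPWORDS}

    def ask(self, question: str) -> tuple[Optional[str], list[str]]:
        """Return the memory sharing the most trigger words, plus its links."""
        triggers = self.trigger_words(question)

        def overlap(m: Memory) -> int:
            return len(triggers & self.trigger_words(m.text))

        best = max(self.memories.values(), key=overlap, default=None)
        if best is None or overlap(best) == 0:
            return None, []  # nothing relevant; no pretense of reasoning
        return best.text, sorted(best.links)


box = Lifebox()
box.add("software", "My novel Software came out in 1982.")
box.add("truck", "In Software, Cobb's mind ran on a refrigerated computer in an ice-cream truck.")
box.add("infinity", "I worked out a theory of uploading in Infinity and the Mind.")
box.link("software", "truck")
box.link("software", "infinity")

print(box.ask("When did Software come out?"))
# ('My novel Software came out in 1982.', ['infinity', 'truck'])
```

The returned link keys are the hooks for follow-up questions: a listener can jump to any linked story, in any order. A real front end would want stemming and smarter ranking than raw word overlap.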

One marketing motivation for a lifebox is that old duffers like me always want to write down their life story, and with a lifebox they don't have to write; they can get by with just talking. The hard thing about creating your life story is that your recollections aren't linear; they're a tangled banyan tree of branches that split and merge. I myself was in fact able to write an autobiography, Nested Scrolls, because I've spent my whole life as a professional writer. But for someone trying to start out from scratch, the task is overwhelming.

Rather than trying to create a linear narrative, the lifebox uses hypertext links to hook together everything you tell it--with no preferred sequence. And then your eventual audience can interact with your stories, interrupting and asking questions.

Who hasn't had the experience of studying an author's writings or an artist's works so intensely that you begin to at least imagine that you can think like them? The effect of the lifebox would be to make this type of software immortality accessible to a wider range of people.

So that's it for today, and I'll be back with more about the lifebox after Yule. Have a good one!

Hi Rudy,

Look forward to hearing more from you in the post-Festive apocalypse.

There's something like the Lifebox in Eric Brown's 'Fall of Tartarus' where the lead character uses it to converse with his missing/dead/who-knows-what father.

I've always chuckled at people claiming 'first' on comments threads, but the timing on this means I might just make it (who am I trying to kid...)

Welcome Rudy, looking forward to all your insights.

"As I recall, thirty years ago, uploading your mind to a computer was not at all an idea that was "in the air.""

OTOH, I recall discussing this very subject at a London Mensa party with Madsen Pirie in 1977.

D'oh!

It'd be an interesting twist on wills: interactive, in your own voice. And a possible way to annoy people from beyond the grave.

The tricky aspect of such a thing would be programming it to know what to censor depending on who it's talking to. Because none of us is exactly the same person to everyone we meet, and one of the things we do on a day-to-day basis is manage the separate relationships and their various access levels.

What if you don't want your wife in Chino to find out about the one in Cherokee? Perhaps you'd rather not tell your grandkids all about the BDSM parties you enjoyed so much? Maybe the stories you share with your friends in Alcoholics Anonymous aren't for your co-workers' ears?

It's a pretty fancy way to write a memoir, but memoirs are never complete. IMHO it's not immortality unless it knows all your secrets--and keeps them in the same way that you would if you were alive.

I’ve been grappling with the idea of human neural/mindstate uploads for some years. I wonder if it is necessary to map the entire body. Yes, our personalities are reflected in our whole physical make-up, but that is represented in the brain. If someone is paralysed from the neck down they could still retain the same personality, and (I would argue) have a sense of an entire body if one were projected convincingly in a virtual environment. Alternatively, what many writers on AI argue is that a disembodied intelligence could never properly replicate a human’s, because it never developed a human form (and likewise a human brain grown without a body: now there’s a thought!).

Anyway, the problem with scanning memory is not just one of resolution; the memories themselves are unreliable. They’re a reconstructed version of events (so is there a philosophical question about their validity to a person’s life?). Your lifebox would then contain your personality only in as much as it showed how you memorised events, which, being usually self-favouring, would give a psychological insight into the ego. Apropos, I watched Black Mirror (repeated tonight) on UK Channel 4, which illustrated a scheme for storing sensory info on a chip and how that could lead to all kinds of problems.

BTW, Rudy, I’m currently on about page 715 of the Ware Tetralogy, reading it on my 7” screen netbook!

"My feeling is that we're on the verge of having a technology for making such digital models of human personalities"

Depends on what you mean by "model". If you mean "a bunch of searchable content, pictures, text, narrative," then yes, we could do this now; Facebook and Google almost do this. If you mean "something you can have a coherent conversation with that behaves like you did," no, not even remotely close. That's basically an AI. Do that, and hello singularity.

Thanks for the thus far friendly comments. Unhyolyguy, yes, the hard part is animating the lifebox, putting the ghost in the machine. I'll say a bit more about this in my next post. That said, if you have a large enough data base, you really can have your lifebox fake being you quite well. But I don't see my lifebox writing my next novel.

Re. your observation that Facebook and Google can almost make a lifebox, yes, that's a point I'm always making when I talk to business types. I really do see this as a near-future product. An iPhone lifebox app.

Evan, having your lifebox show different faces of you to different people would certainly be feasible. Though you might have to hand-curate who's on what lists. But the lifebox ware would remember the threads with your various interlocutors in any case.
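The hand-curated lists mentioned above could be sketched as a simple access filter. This assumes each story is tagged with the names of the lists allowed to hear it, and that every visitor implicitly belongs to a `public` list; `Story`, `visible_stories`, and all list and user names are hypothetical.

```python
from dataclasses import dataclass

PUBLIC = "public"  # hypothetical list that every visitor belongs to


@dataclass(frozen=True)
class Story:
    text: str
    audiences: frozenset[str]  # hand-curated lists allowed to hear this


def visible_stories(stories, user, membership):
    """Return the texts of the stories this user is allowed to hear.

    `membership` maps a user name to the set of lists they are on.
    """
    lists = set(membership.get(user, set())) | {PUBLIC}
    return [s.text for s in stories if s.audiences & lists]


stories = [
    Story("How I started writing science fiction.", frozenset({PUBLIC})),
    Story("A story I only tell old friends.", frozenset({"friends"})),
    Story("Family gossip.", frozenset({"family", "friends"})),
]
membership = {"old_pal": {"friends"}, "grandkid": {"family"}}

print(visible_stories(stories, "grandkid", membership))
```

The filtering itself is trivial; as noted above, the real work is curating who goes on which list.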

Hm... I look forward to your AI insights (have we got to the stage of "It's AI, but not as we know it" yet?) and writing SF... as it is, I'm still recovering from a trip to Spaceland... brain still hurts on that one...

Randomly, I've just been reading Alastair Reynolds' "Revelation Space" books for the first time, and there was an idea in there that struck me as rather clever: the idea that they have "Alpha level" and "Beta level" simulations of people.

A Beta is pretty much exactly what you just described. It's not really sentient, it's just an extremely complex series of decision trees and recorded behaviors that can mimic the person well enough to pass a Turing test. (An Alpha is the standard transhuman-ideal mind upload.)

I thought it was kind of interesting to get into the idea that even if we demonstrably do have technology capable of producing true computer intellect, that still doesn't answer the question of whether the particular software you're talking to right now is intelligent or just a good fake.

In Iain Banks' Culture novels (Excession, I think), one of the drones or knife missiles goes into some detail about its different levels of brain power and personality, from a basic computer-chip type through a modified organic one to a small mind.

And regarding the singularity, surely that only comes about if the AI is capable of upgrading itself as much as it wants, and such a superintelligent being does manage to break or reinvent the laws of physics.

http://sf-encyclopedia.com/entry/upload has a few early references to mind upload. (Warning, major time-suck potential)

"Interesting early examples are "Point of No Return" (July 1963 NEW WORLDS) by Philip E HIGH, where soldiers operating WEAPON systems by remote telefactoring suffer inadvertent personality transfer to their machines; The Ring of Ritornel (1968) by Charles L HARNESS, in which portions of an unwilling human's brain are physically transferred into a MUSIC-making computer – the result being strictly speaking a CYBORG; "The Schematic Man" (January 1969 PLAYBOY) by Frederik POHL, whose protagonist encodes himself as a computer program; Midsummer Century (April 1972 F&SF; 1972) by James BLISH; Catchworld (1975) by Chris BOYCE; and "Fireship" (December 1978 ANALOG) by Joan D VINGE."

(please pardon all the upcasing, it's how the SF-E marks hyperlinks)

Hi Rudy, welcome.

I agree that we could have a lifebox database and some sort of expert-system front end that would be able to fake at least some kinds of conversations with people who didn't know the original subject very well, sometime in the next 10 or 20 years. And that's certainly no less a form of immortality than what a writer gets by leaving behind her body of work. But my impression is that what people mean when they talk about digital immortality is mind-uploading, resulting in a consciousness in the machine that can believe (and I use that word with all its connotations of subjectivity) that it is a faithful (in some senses yet to be determined) copy of the original. And I am dubious that that is even possible, and am sure that if it is, it's orders of magnitude harder to implement than the lifebox.

The difference between a lifebox and an upload is the difference between a model and a simulation. A model is expected to be approximate, and may even behave incorrectly in some areas, because we don't care about modeling those areas. That's because we use a model to understand or reason about the original. But a simulation is often used for the results of its behavior, sometimes replacing the original in a larger context, and so we care that its behavior match the original's. As long as we're clear what we've got and how we're using it, there isn't a problem, but if we lose track of that, we can get situations like the one Sherry Turkle described in her study of robot pets in families: the robot's behavior breaks down unexpectedly in situations the animal would handle naturally, and the humans mistakenly try to understand the broken behavior as somehow consistent with the internal structure of the robot's mind.

And what for? Don't we have enough old people everywhere around us? Your Lifebox (or any other "ghost in the shell" thing) would be a curse on younger generations. Nowadays people inherit when they reach 60 or more, when they have retired. Historically, inheritance let you marry, raise children, and keep the farm you lived on, to give your children a wealthier life. If something like your lifebox comes along, one day it will be close enough to the dead person it is made from to actually BE this person (or be seen as such) and... no one will ever inherit again, and our children will be slaves to death in a horrible thanatocracy. It gives me the chills... I hope that future never occurs (but you are free to use it in a novel if you want).

Another point: what is a human being? Someone who evolves, isn't it? But how could your Lifebox (TM) ever be regarded as "someone"? I'll read your autobiography, but I will never speak to you after your death...

Two last things: 1) I totally agree with you about Accelerando (as Charlie isn't here, we can speak frankly; he won't notice). It's his best book and probably one of the best of the last 20 years. 2) As you have probably noticed, English is not my native language. And you haven't been translated into French since 1986. Why?

Joelfinkle, that's an impressive list of early uses of ideas similar to the uploading-your-mind-into-a-computer trope. I'll have to check out Frederik Pohl's "The Schematic Man" in particular. I hadn't thought of checking the SF Encyclopedia on this point.

I see that I'm delving into the comments already, even though I'd meant to not get drawn in yet.

If I'm not around for awhile, you can always try "talking" to Rudy's Lifebox until I get back :)

It's online at http://www.rudyrucker.com/blog/rudys-lifebox/

I am relieved, however, to see that the Science Fiction Encyclopedia does list SOFTWARE among its early examples of the uploading trope.

Tarkinlyon, I take your point that people probably would not be very interested in accessing lifebox emulations of the deceased. There's a related issue regarding corpsicles. Who's going to bother to thaw them out and reanimate them, even if it's possible? Enough's enough. Let the new generation take the stage.

I've had this feeling very strongly on the sad occasions of cleaning out the houses of deceased older family members. So many papers, and they even have papers from their ancestors. At some point, it just becomes humus for the endlessly sprouting forest floor.

Re. my publications in France, I seem to have brief bursts of popularity in countries, and then it fades away. I've been hot in Japan, Germany, Italy, and Spain at various points. There's been some slight interest in France; I think there is still a thick omnibus of mine available there.

Someone has already mentioned Alastair Reynolds' beta-level simulations from Revelation Space, and I think the way that Kim Stanley Robinson treats AI is not far removed either: you have some brick of human-seeming program that follows you around, is sufficiently familiar with your preferences to answer your phone, and can answer historical questions, in your voice, to others by dint of being around all the time.

It's also pretty much what we are led to believe about the upload Cylons in the short-lived "Caprica" series: some sort of proto-AI is cut loose to scour any and all available data, from social networks to medical records, to pattern itself into a replica. (Now, it's implied that gathering neurological data is an important step forward, that the resulting entities are genuine AI persons, and that they are not the same people as their progenitors, but it's still in the same vein.) Dang, I miss that show.

It's actually something I've been pondering implementing for a while now: pseudopersonality as an outgrowth of Bayesian spam filtering. If I have something that's constantly asking my opinion, or making choices and submitting them for my approval, or running other related processes, and it is able to tag my answers and my daily expressions with the right metadata, it's not hard to imagine that one could arrive at a passable ghost self: one that doesn't have to "do" any of the hard AI stuff, or the equally (if not more) problematic and fraught migration from meat, but still might be useful to have around. We have mechanisms for delegating our opinions already; the simple fact that we can presume other people's opinions is a start.
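A minimal sketch of that spam-filter idea: a multinomial naive Bayes classifier (the technique behind many Bayesian spam filters) trained on items you approved or rejected, which then guesses how you would react to something new. The `OpinionModel` name, the word-level features, and the add-one smoothing are assumptions chosen for illustration, not a description of any existing system; the training items are made up.

```python
import math
import re
from collections import Counter


class OpinionModel:
    """Naive Bayes over words, trained on items you approved or rejected."""

    def __init__(self) -> None:
        self.word_counts = {True: Counter(), False: Counter()}
        self.doc_counts = {True: 0, False: 0}

    @staticmethod
    def tokens(text: str) -> list[str]:
        return re.findall(r"[a-z']+", text.lower())

    def train(self, text: str, approved: bool) -> None:
        self.word_counts[approved].update(self.tokens(text))
        self.doc_counts[approved] += 1

    def log_score(self, text: str, label: bool) -> float:
        # Vocabulary size plus one slot for words never seen in training.
        vocab = len(set(self.word_counts[True]) | set(self.word_counts[False])) + 1
        total_docs = sum(self.doc_counts.values())
        # Smoothed prior: share of past items that got this label.
        score = math.log((self.doc_counts[label] + 1) / (total_docs + 2))
        label_words = sum(self.word_counts[label].values())
        for w in self.tokens(text):
            # Add-one (Laplace) smoothing so an unseen word can't zero a class.
            score += math.log((self.word_counts[label][w] + 1) / (label_words + vocab))
        return score

    def would_approve(self, text: str) -> bool:
        return self.log_score(text, True) > self.log_score(text, False)


model = OpinionModel()
model.train("new novel about the singularity", approved=True)
model.train("singularity talk at the conference", approved=True)
model.train("pigeons in the park photos", approved=False)
model.train("more pigeon pictures from the park", approved=False)

print(model.would_approve("a talk about the singularity"))
```

Working in log space avoids underflow when multiplying many small word probabilities; the "ghost self" is only as good as the volume and honesty of the approvals it is trained on.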

I wonder if there's a way to merge a LifeBox data set with an ELIZA code base.... http://webseitz.fluxent.com/wiki/LifeBox

Hi Rudy! Long-time reader. Just to chime in about the First to Print with the idea of uploading consciousness. I think prior to your work the idea was considered, but the "uploading" part was glossed over. Simulacron-3 and Tunnel Under the World come to mind. Closer in time to your work is Pohl's Heechee books. The second one, 'Beyond the Blue Event Horizon' (1980), explicitly has characters that have been (partially) uploaded into an alien computer. If you expand beyond print and into film, we can refer to Tron (1982), which depicted the direct digitizing of a human being (via laser).

Yes, Bill Seitz, you would want some simple AI to smooth over the quotes from you that are dug out of your data base. Something like Eliza or, more accurately, like the kinds of programs being entered in the annual Turing Imitation Game contest.

I had an article about Lifebox stuff in the short-lived webzine H+, edited by no less a man than R. U. Sirius. See http://hplusmagazine.com/2010/12/21/lifebox-immortality/

So how does the Lifebox know the relative importance of a particular idea or event in someone's life? How does it know the issues which link one event to another event? How does the lifebox mimic the human tendency to link ideas/objects/events inappropriately, or to make one link relatively stronger than another for no obvious reason? Obviously there are clear paths through a consciousness that are composed of these links.

I'm currently wrestling with similar issues because I'm writing a website meant to make studying/teaching history easier. Like the lifebox, it's designed around the idea that everything is hyperlinked together, (though the history site isn't designed to mimic a historical person.)

A historical event is connected to a place, a time, various people, objects and organisms, and of course a historical event is connected to multiple ideas, themes and memes. All these things are linked together in one gigantic mesh, which can be followed in space, time, and along various memetic pathways much like a human life...

I've been experimenting with the idea of a "node," which is a database entry which contains its own links to other entries, and is thus self-navigating without the need for a database search. The idea is to make each object in the database have "awareness" of its links to other nodes so that history can be self-organizing in ways that are not necessarily linear and compartmentalized in rows and columns. I think this approach would work very well for your Lifebox.
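The self-navigating "node" described above can be sketched with plain object references. This assumes links are undirected and that each node needs only a label and a kind (event, place, time, person, idea); `Node` and `reachable` are hypothetical names, and the Berlin Wall example is illustrative data only.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass(eq=False)  # eq=False keeps identity hashing, so nodes can be dict keys
class Node:
    label: str
    kind: str  # e.g. "event", "place", "time", "person", "idea"
    links: list = field(default_factory=list, repr=False)

    def connect(self, other: "Node") -> None:
        # Each node holds direct references to its neighbors, so navigation
        # follows pointers rather than querying a database.
        self.links.append(other)
        other.links.append(self)


def reachable(start: Node, max_hops: int) -> dict[str, int]:
    """Breadth-first walk from `start`, following each node's own links."""
    hops = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if hops[node] == max_hops:
            continue
        for nxt in node.links:
            if nxt not in hops:
                hops[nxt] = hops[node] + 1
                queue.append(nxt)
    return {n.label: h for n, h in hops.items()}


wall = Node("Fall of the Berlin Wall", "event")
berlin = Node("Berlin", "place")
year = Node("1989", "time")
cold_war = Node("End of the Cold War", "idea")
for neighbor in (berlin, year, cold_war):
    wall.connect(neighbor)

print(reachable(berlin, 2))
```

Because each node carries its own links, a walk like this never consults an index; the trade-off is that adding or removing a link has to update both ends.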

The list is VERY incomplete. To give the first missing story which comes to my mind: The Werewolf Principle (1967) by Clifford D. Simak. Many centuries in the future there is a bank of uploaded human minds.

Begin quote1. He took the paper from underneath his arm and opened it. The House had been right, he saw. There was little news. Three men had been newly nominated for the Intelligence Depository, to join all those other selected humans whose thoughts and personalities, knowledge and intelligence, had, over the last three hundred years, been impressed into the massive mind bank which carried in its cores the amassed beliefs and thoughts of the world's most intellectual humans. End quote1

The minds in the bank are more than accumulations of personal data. They are all sentient and they can exhibit desire, initiative. They can phone out to people "outside".

Begin quote2. "No," said Blake. "Go ahead. It makes no difference to me." A voice, concise and frosty, speaking flat words, with no hint of intonation, said: "This is the mind of Theodore Roberts speaking. You are Andrew Blake?" "Yes," said Blake. ... End quote2

There is no such thing as standardized terminology in SF literature. This must have fooled the person doing the metadata and/or indexing for the SF database. For instance, Simak does not use the term uploading to describe the transfer of minds to the depository, and in that novel an android is not a robot with a more or less human-looking body, but a biological construct, a bit like the replicants in Blade Runner but with notable differences. By the way, Simak wrote this a year before Dick wrote Do Androids Dream of Electric Sheep?

On the uploaded minds, I seem to recall that The City and the Stars by Arthur C Clarke had people being stored in the central computer. That would date the trope back to 1956.

"What if you don't want your wife in Chino to find out about the one in Cherokee? Perhaps you'd rather not tell your grandkids all about the BDSM parties you enjoyed so much?"

Flip that question around: you're dead, why would you care?

I'm assuming a somewhat sociopathic personality in the first place to be doing stuff you really don't want other people to know about; once you're beyond retribution, though, why does it matter? Apart from the tax authorities and the offshore account you opened to stash a little something away for your heirs? (This is less of a problem in the USA than in places like the UK, where inheritance tax at up to 40% kicks in on estates with valuations below the price of the average house.)

Hmm. The whole lifebox thing opens up several cans of worms ...

I recall being well impressed with Catchworld. However, at the time I did not notice the gaping plot holes - just overcome by the originality of it.

There's a Gibson novel (I'm thinking Idoru) where this high-ranking Yakuza consults his ancestors, who were transformed into AI and integrated into a sort of Shinto shrine. Could be a great way to remember your departed ancestors, I'm thinking, not to mention a treasure-trove for historians. Although the privacy concerns outlined by Evan @6 would still be a problem...

"There's a related issue regarding corpsicles. Who's going to bother to thaw them out and reanimate them, even if it's possible? "

Their relatives? Those frozen now will likely still have grandchildren alive when the tech becomes possible. Very much so if anti-aging tech is created during the next 50 years.

"I'm assuming a somewhat sociopathic personality in the first place to be doing stuff you really don't want other people to know about; once you're beyond retribution, though, why does it matter? "

Beyond retribution... and you wanted a reason for the simulation argument?

You are wrong about it. Igor Rosokhovatsky wrote about it in his Sigom/Sihom series at least since late 1960s...

Mona Lisa Overdrive.

I'd argue it's simply our current society's attempt to come to terms with death - after all, mummification in ancient Egypt was exactly as 'real' a way to ensure life after death for them as Lifeboxes, cryogenics and uploads are for us. But of course, this time we've cracked it, surely...

Anyhoo. 1951, Ray Bradbury's 'The City' (part of 'The Illustrated Man') - humans are dissected and recreated artificially, memories and all, but reprogrammed to act as suicide bombers. Kind of an upload.

You can see a similar concept in the Galactic Center series by Gregory Benford, starting in Great Sky River.

"chips that contain imprinted personalities from the distant past - Aspects and Faces-"

The simpler Faces are more in line with the Lifebox; Benford goes on to have more advanced Personalities in later novels in the series.

I'm not really convinced that a summation of an advanced social network with some sort of Turing-compliant interface would ever mimic me accurately. The reason is that a large part of social interaction doesn't reflect what one really thinks: I may 'like' a friend's series of pictures of pigeons in the park whilst secretly thinking "why don't they get off their a* and do something constructive with their media degree??"

As/if social networks become even more intertwined with our everyday lives (perhaps similar to Charlie's lifelog idea of recording sight, sound, position etc 24/7) an appropriate lifebox could be created but the software that has to turn all that data into a Ryan-chatbot would have to be extremely good at working out my personality from my actions, especially when a large part of those actions are ambiguous as to what they indicate and may be deliberately deceptive.

Awesome! I always wanted to meet the Finn.

I suppose it might be an interesting variation on the Turing test theme, trying to mimic a specific person using an exhaustive database of his or her output, but it feels to me that this is to true AI or uploading what predicting the weather with an almanac and a window is to having a datalink to a weather satellite + archived data.

Though I suppose a Christopher Hitchens bot would be a handy thing to whip out whenever arguing with a creationist.

"...if you have a large enough data base, you really can have your lifebox fake being you quite well."

As a long-time (now former) AI researcher, I would suggest reading about Doug Lenat's Cyc project. Its basic premise has been, and remains, the very thing you propose above: build a large enough expert system and it will be able to fake being a person. Cyc was originally pitched as a ten-year project, and it continues to live on through corporate and government sponsorship, but it has yet to produce anything close to a lifebox.

On a related note, has anyone ever written a novel/short story about a first attempt at creating a digital life copy/life-box? I think the failures would make for an interesting plot.

I'd program my lifebox to put especially juicy or personal anecdotes behind riddles, and I'd leave behind heirlooms that hinted at the answers.

Apropos of nothing much, I just got Charlie's WIRELESS collection from the library and am contentedly reading it. "Missile Gap" is so great. I've always liked the idea of a flat, really big world.

Keith, yeah, you could do a good story with a sinister failed lifebox-driven android in it. "Dad...Dad?"

I see lots of quibbles on my SOFTWARE claim of early uploading into android credit. That's okay. We went over this on my blog once, but I can't find the link, lacking a really good memory search tool. In any case, SOFTWARE was the first novel exactly like SOFTWARE!

Zachariah, you put your finger on why we are so interested in various forms of digital immortality...we don't want to die.

When I started writing SOFTWARE I was interested in the philosophical question of whether a physical and mental copy of me would be "me." I decided to just have the copy think that it IS me and let it go at that.

These days, as I get older, I'm more comfortable with the notion of my personal obliteration by death and various types of digital immortality don't seem as important as they once did.

I guess the reason I brought up SOFTWARE and the lifebox as my first post was simply to stir up some conversation.

One more thought regarding the fear of death. It makes sense for your whole life to be tightly attuned to avoiding death, it's the survival instinct that evolution has honed into us. I'd argue that it's a MISAPPLICATION of this instinct that makes us so desperate to avoid the ultimate, final, and unavoidable death that comes at the end.

Better to accept it, I think.

Rudy, I think you're in the minority when you say you've grown more accepting as you got older. I'm 65, and I'm with you on this, but many of my friends and relations seem to be getting more and more upset about getting closer to the end. Except my aunt, who'll be 100 in March; she's had a pretty full life, and done a lot of the things she wanted to, that seems to help. I think she's just hanging on until the odometer ticks over, so she can say she made it 100. Which isn't a bad way to go out, in my view.

There's an SF story I read a few years ago, written recently, in which an aging software developer with terminal cancer builds a lifebox for himself and gets his friend, a lawyer, to wrap a court up in the philosophical tangles around identity and selfhood, so that it allows him to die by assisted suicide. Then when his lifebox is informed of his death, it tells the lawyer that it's not really a copy of his friend, just a fancy recording, and shuts itself down. I can't remember the title or author though.

Alain, I would make the point that we're talking about two slightly different tropes.

The older one is that of the mind in the computer that appears as a talking face on a wall...beloved by Hollywood. If the talking face was at one time a living person, this is in some sense akin to the ghost trope.

The newer trope that I was exploring in SOFTWARE is that of a human mind that is animating an android robot.

AI people sometimes say that being embedded in a body is an essential part of being a conscious, intelligent agent.

Involuntary death is evil. Being "natural" does not make the situation better. Not accepting death is what drives medicine, not to mention the Transhumanist agenda. Would anyone here reject a pill that would turn back the biological clock to 20, and opt for old age infirmity and death instead? Or worse, reject such a pill on behalf of everyone else?

Akin to the zombie trope.

If nobody died, we'd be in an ocean of flesh, no? Well, I guess we'd have to give up having children. Steep price.

As for resurrecting relatives...would you resurrect your father-in-law? I can imagine an SF tale with ten generations of your ancestors hanging around, and you're forever at the bottom. Drastic measures ensue.

"If nobody died, we'd be in an ocean of flesh, no? Well, I guess we'd have to give up having children. Steep price."

Not really. We can choose to live in a world with a high death rate and high birth rate, or a low death rate and low birth rate. As for demographics, one study of Sweden showed a 22% increase in population after 100 years if aging ceased but the birth rate was maintained.

"As for resurrecting relatives...would you resurrect your father-in-law?"

Yes, and my parents and grandparents.

Charlie: Sociopathic personality? Really? And yet you wrote this...

http://www.orbitbooks.net/2011/07/15/crime-and-punishment/

I have things about me I don't want other people to know trivially, because they could be used to hurt me. I've done things that, while victimless, could have gotten me arrested. I'm a member of a minority that's disproportionately targeted for hate crimes of all kinds -- a couple of them, in fact; there are portions of the globe where just entering the local customs border would automatically expose me to capital punishment for the crime of being who and what I am. I'm fortunate that's not the local case, but the point is...can't you imagine some reason why someone might want to keep a fairly significant part of their life and identity as privileged information? It really shouldn't be too hard...

Agreed on the point about post-death disinterest in filtering that, though. I'd rather people not be able to hide from it, and if that means nobody wants to actually use my lifebox because it's uncomfy, well, I'm literally beyond caring at that point.

My issue isn't about keeping part of your life as privileged information, but about doing so after you are dead.

Now, there is a point that you might well want to do so if disclosing information about your personal life might expose other people to harm (the minority status you're alluding to, I think). But at this point we're talking about something else.

Ah, yes, Cyc. The original project in the early '80s was expected to require a few thousand rules. In 1986 Lenat estimated that the working version would require about 250,000 rules, which is about where it is now (not including several million "assertions" which are not the complex rules used to encode real world knowledge). And it still can't do what the original project planned on: reason intelligently and generally about real world situations without needing additional rules about each one. I doubt it ever will; the idea behind Cyc is not the way humans or animals reason about the world, and it clearly ignores some features of the way we work that would seem to me to be essential.

"Another side-issue regarding personality uploads is that our personalities are not in fact localized within our brains."

Is there evidence for this? I'm on board with the idea that your mind doesn't evolve into a human mind without embodied experience from a normal-ish sensory periphery (no, not excluding Helen Keller from humanity here), but that's not quite the same as the assertion that, at any given state of your mind's evolution, aspects of personality are stored somewhere that isn't the brain. I think the evidence for this would have to be something along the lines of "amputation and peripheral demyelination change personality," but then of course you have to deal with the fact that the person in question has a serious medical condition, so it might be hard to tease apart.

I guess you might get somewhere with the sort of embodied cognition research that shows that holding a pencil in your mouth makes you feel happier (because it forces your mouth to approximate a smile). The personality at the time of upload might be intact, but weird sensory input might change it fairly quickly.

Also, I published a story in the 2007-08 issue of COSMOS about lifeboxes essentially self-organizing on the Internet. I was responsible for the title, which I did not at the time know had been staked out by China Mieville's entry in the Thackery T. Lambshead Pocket Guide to Eccentric & Discredited Diseases; I had nothing to do with the tagline or the illustration.

"Another side-issue regarding personality uploads is that our personalities are not in fact localized within our brains."

Yes, what part of our personality comes from having a body, with all the hormones and aches and pain to which man is heir? At best a simulation of a human personality would be a strange copy, a reflex-machine programmed to react reliably to certain stimuli. It might be a terrifying experience to interact with a ball-less, gut-less version of a loved one. Would such a thing be like an autistic or lobotomized version of the original?

And the real rub about Cyc is that it requires a working knowledge of first order predicate calculus to enter the rules ("Acquiring knowledge by brain surgery," paraphrasing Lenat). So Rudy, if you think writing about your life is tough, try encoding it in sophisticated logic.
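For a sense of what "encoding your life in logic" involves, here is a hedged illustration in generic first-order notation. It is not Cyc's own CycL syntax, and the predicate names (Wrote, PublishedIn, Novel, Novelist) are made up for this example: one biographical fact, plus one general rule a knowledge base would need before it could reason about that fact.

$$
\text{Wrote}(\text{Rucker}, \text{Software}) \land \text{PublishedIn}(\text{Software}, 1982)
$$

$$
\forall p\,\forall b\,\big(\text{Wrote}(p, b) \land \text{Novel}(b) \rightarrow \text{Novelist}(p)\big)
$$

Even this tiny rule set cannot conclude Novelist(Rucker) until someone also asserts Novel(Software), which is the kind of endless extra rule-writing the Cyc comments above describe.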

Just have to add that I loved your book SOFTWARE, though the robots eating brains as a means to upload them really grossed me out. You're right; those scenes would make most zombie movies look tame in comparison. You should talk to Greg Bear about getting it made into a movie. He knows more about Hollywood than most SciFi writers, with the exception of Harlan Ellison, but who can talk to Harlan without fear of humiliation and life-long rehabilitative therapy?!!! ;-)

I think you may find that the final outcome can be a significant part of emotional decision making.

Ahem: if I stick a knitting needle through your eye orbit and stir it all about, your personality will demonstrably change. Whereas if I remove one of your kidneys, or one of your legs, you may be (justifiably) annoyed, but you'll still be you. This is the empirical basis for the assumption that mind uploading should focus on the brain.

Dr. Rucker -- DOES anyone have the film option for SOFTWARE?

If not... What would you take for it? :)

SOFTWARE, and now WETWARE, which I'm reading now, are the first "cyberpunk" books I've read where characters upload themselves (or get their brains eaten by robots, heheh) and yet don't seem terribly attached to the idea of doing so in order to gain immortality. This is due to their understanding of more universal truths such as The One (as the boppers call it) and Heaven, as Cobb calls his "time between bodies."

If you're really worried about dying -- spend more time trying to understand Buddhism/Taoism/Zen -- it's much more likely to make you feel OK about the end of your physical body than some possible breakthrough in mind uploading technology.

One of the many fun things about Simak's "The Werewolf Principle" is that he does both tropes, the sort of old "ghost" one of a talking soul with no body and the sort of newer one where an uploaded mind resides within a human looking / nearly human body.

It turns out, as we read the novel, that Andrew Blake took up his name just for convenience. When he was created as an android / replicant / bio construct centuries earlier, he was given the memory and also the personality of Theodore Roberts. In a sense he's talking to himself in that phone conversation I quoted.

However, they were not doing this on a regular basis as in your 1982 novel.

For regular uploads as something common in society you have to go to Clarke's "The City and the Stars" of 1956, as someone else (snap2grid?) has already pointed out. I feel dumb for having forgotten it. Maybe it was due to my state of rage when I read it, after having read Against the Fall of Night. It seemed vastly inferior because it left too little to the imagination of the reader.

To answer your question as to who would bother thawing out corpsicles, there is always the possibility of grandchildren and great-grandchildren doing it out of curiosity. Or it could be part of a tradition, since many Earth societies have some form of ancestor worship. You thaw out gramps and granny, heal their ancient ills with more modern medicines, then put them back in a new, improved hibernation cocoon. After all, Earth (and the entire solar system) would get too crowded if they hung around too long, and since we believe in ancestor worship we have to preserve our ancestors well, so that future grandchildren and great-grandchildren will have a chance to talk to them too in 100, 200, 400, 800 years.

Even if I agree that a working model of a brain represents the truest representation of a human personality (and I might), the motivations of such an object would differ significantly from those of the original. What would a life-box want? Would it react similarly to outside stimulus? Could it remain sane? Would it resent being created? A rogue Alpha-level simulation in Alastair Reynolds' The Prefect is a good example of a mind-copy with totally different goals than its original.

The physical pleasures of being a human far outweigh the intellectual pleasures (at least in my opinion). In order to create a perfect copy of a human, you should also create a perfect copy of a human body, or at least ensconce it in a perfect simulated human environment, a la the digital Robinette Broadhead of Pohl's Gateway books. Even then the digital Broadhead had little in common with the flesh-and-blood original.

Also note that I think a WikiLog provides a nice way for lots of bushy linking that's more pragmatic than a SemanticWeb kinda thing. http://webseitz.fluxent.com/wiki/WikiLog

Giving it a hi-res simulated body and a hi-res simulated environment would take care of those issues.

Now, the only problem is: would you be satisfied with a simulated environment? Where you knew everything was fake?

In any case, I think that a human 'simulant' might end up needing some serious therapy. After all, the original is presumably dead. And if the original is not dead, that could be even worse. The age-old question of "who am I" could be a real bear for a near-perfect copy.

Personally, the thing I'd like to add to a life box is a fairly sophisticated neural net that would train itself to be you. Possibly we could even have it pass a specialized Turing Test to demonstrate that it is indistinguishable from you in whatever range of responses was appropriate.

If we wanted to reincarnate you, we could (theoretically) create a clone child, then give the lifebox the task of training said child to be you. Success would be demonstrated by having the clone pass the same Turing-style test that the lifebox passed.

Is this the same as uploading? Not particularly. The nice thing is that it's easier (close to technically feasible now), and it doesn't rely on messy brain deconstruction and questionable reconstruction. The upload/download process always struck me as the equivalent of stripping an old car to its component parts while it's running, then rebuilding it from new parts (appropriately aged), also while it's running. Not easy, nor terribly safe. (The analogy, for those who missed it, is that brains are constantly changing state, and trying to deconstruct and reconstruct a complex structure that's moving and changing gets interesting.)

It also doesn't bring up any ugly theological questions about souls and such, although the legal implications of this are fascinating. Who gets to be a person, when you can copy yourself? Or do we create version trees, and grant rights Polynesian style, where the closer you are to the root, the higher your rank in the tree?
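The version-tree idea can be sketched as an ordinary tree in which rank is simply distance from the root. This is a minimal Python sketch, assuming that "rank" means nothing more than the number of copy steps from the original, with ties at the same depth broken alphabetically (an arbitrary choice). The `Copy` class, `fork`, and `rank_order` are hypothetical names for illustration, not any real system.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Copy:
    """One instance of a person: the original (root) or a copy of an earlier instance."""
    name: str
    # repr=False keeps the default repr from recursing parent -> children -> parent.
    parent: Optional["Copy"] = field(default=None, repr=False)
    children: List["Copy"] = field(default_factory=list, repr=False)

    def fork(self, name: str) -> "Copy":
        """Create a new copy of this instance and record it as a child in the tree."""
        child = Copy(name, parent=self)
        self.children.append(child)
        return child

    def depth(self) -> int:
        """Count the copy steps between this instance and the root (the original)."""
        steps, node = 0, self
        while node.parent is not None:
            steps += 1
            node = node.parent
        return steps


def rank_order(root: Copy) -> List[Copy]:
    """List every instance in the tree, highest rank (closest to the root) first.

    Ties at the same depth are broken alphabetically by name (an arbitrary choice).
    """
    everyone, stack = [], [root]
    while stack:
        node = stack.pop()
        everyone.append(node)
        stack.extend(node.children)
    return sorted(everyone, key=lambda c: (c.depth(), c.name))


# Usage: an original with two direct copies, one of which has been copied again.
original = Copy("original")
copy_a = original.fork("copy-a")
copy_b = original.fork("copy-b")
copy_a1 = copy_a.fork("copy-a1")
print([c.name for c in rank_order(original)])
# prints ['original', 'copy-a', 'copy-b', 'copy-a1']
```

The point of the design is that rank falls straight out of the tree's shape: a copy of a copy automatically outranks nobody above it, and no separate registry of seniority is needed.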

Two points.

I am not who I was a decade ago; about 90% of the cells in my body are new (the old ones having died) and of those that persist, literally every molecule in them will have been replaced at some point in the preceding decade. I'm not a solid object, I'm an organizational pattern. If you define "me" as a physical object, then the "me" of 1991 or 2001 is, in fact, dead -- it no longer exists. (Corollary: continuity is over-rated.)

The living objects around me are in the same situation. Personally, I don't care about the authenticity of inanimate physical objects (like, say, this desk): the only authenticity I care about is that of organizational patterns -- the pattern that the atoms of the desk conform to, or, for that matter, the pattern of atoms in motion that just happen to currently comprise my wife.

If you can simulate one human being, you can simulate n >= 1. So: no need to be alone in the box.

Who's going to bother to thaw them out and reanimate them, even if it's possible? Enough's enough. Let the new generation take the stage.

Warren Ellis touches on this subject in his SF graphic novel Transmetropolitan- in the future, cryogenic corpses are defrosted and speed-cloned into new bodies, because it's a fairly trivial exercise, and it's what they presumably paid for, right? When the shocked, newly-young ex-corpsicle is decanted from the tank, a bored functionary passes them a box of charity-shop clothes and the address of a Revival hostel, and mentions that the next bus is in five minutes, so if you don't mind... The revived corpsicle is then pushed out onto the street, to sink or swim. Most sink. The whole revival exercise is treated very much as a grudging covenant with the past- an unpleasant duty, done with a necessary minimum of effort.

Kudos to all the early references to uploading. Does anyone have a reference for a story where it's explicitly stated that the ghost in the machine is the same person they were in their meat incarnation, computationally speaking? Because AFAIK, Rudy Rucker has the distinction of being the first one to do so (or if you want to get fancy, was the first to invoke the Church-Turing thesis).[1]

From what I've seen glancing over the thread, no one has explicitly asked for whose benefit the life box exists.

If the life box is primarily a conceit of the departed, a mausoleum dedicated to their life, a case could be made about including everything, the good, the bad, and the ugly. Those bad incidents are part of what make the departed who they are, after all.

But if the life box is for the living, something to remember the dead by (which is what I think the stated purpose of the life box is; it's far too primitive to encode any distinctive dynamic essence of a personality), maybe you would want to do a bit of selective editing.

[1] Kudos on Software, btw. A fantastic book, something that made me put Rudy Rucker into the 'buy on sight' category. But it was the characters that did it for me, not the conceit of uploading or the sf background. Cobb Anderson, Sta-Hi, Annie, et al., they were real people stuck in this sf world, stuck in terribly plausible situations and responding in ways that really made you feel for them. At the time, I thought the dominant theme was despair, and how people handled it: Cobb, brilliant, bitter, and scared, takes the sf option; Sta-Hi, good for nothing and knowing it, opts for nihilism; Annie, the best of the lot, just wants one last little taste of happiness. At the time, I thought I was looking at the new Robert Sheckley, not one of the heirs of Philip K. Dick :-)

The most likely destination for a corpsicle is a slice'n'dice upload. Everybody happy.

"Now, the only problem is: would you be satisfied with a simulated environment? Where you knew everything was fake?"

Since I believe I am already in a simulated reality, several levels deep, I think I can answer the question: it's mostly OK, but could be better.

For Dirk and the transhumanists: "Involuntary death is evil. Being 'natural' does not make the situation better." I totally disagree with this: death has nothing to do with good or evil, which are human-made. Death just occurs, like rain or the wind. And complaining about weather is just stupid.

"Not accepting death is what drives medicine, not to mention the Transhumanist agenda." Again: no. What drives medicine is fear of pain and the will to have a better life. What drives the transhumanists, I don't know, but I think it is just a childish fear of rain.

"Would anyone here reject a pill that would turn back the biological clock to 20, and opt for old age infirmity and death instead? Or worse, reject such a pill on behalf of everyone else?" Yes, I would. People come, people go. Species come, species go. It's just a part of natural evolution. What's the point in refusing it? Accepting the world as it is is the beginning of wisdom. If you accept the world as a fact, THEN, and only then, you can try to change it for a better world. I don't buy the whole transhumanist faith, because it's a faith: swap heaven for a computer, spirit for code, and there it is. The whole thing is just a brand-new religion with neon and chips. A dog wearing a hat is still a dog. Plus your anti-ageing-pill is just a magic pill, and has nothing to do with science. I don't know if I have a soul. I don't know if I have a spirit of my own that will be uploaded (to heaven). But I know I have flesh, a body, and meat. Science begins with materialism. I won't exchange my knowledge for your faith.

For everybody else: I've been very polite, not using any F or S words. But I'm so tired of priests... even electronic ones. Uploading your mind is very cool in SF, like FTL or time travel. But there is no church of the time travellers in the real world, and it's much better that way. (BTW: there is a church of FTL and the worshippers are called Trekkies, but that's another story...)

S'funny most people here manage to use the "F or S words" and be civil.

Must be some transhumanist voodoo

Minor quibble - I've often heard things along the lines of "about 90% of the cells in my body are new (the old ones having died) and of those that persist, literally every molecule in them will have been replaced at some point in the preceding decade."

I suppose that's roughly true, but I do wonder why my tattoo is still there.

@ Dr. Rucker - I adore the program "Cellab", it's been on at least one of my machines for many years. Although I come from a maths background, I just run it for the pretty lights, and very pretty they are.

@ general discussion - obviously it wouldn't mean much to me if a simulation/computational copy of me continued after my death. But it would matter a great deal to the copy, and since I generally like people who resemble me, I would wish it health and long life. And if I'm any use to other people, then so would my copy be. If not, well, nobody seems to mind this version of me...

I suspect a copy of me would be reasonably content within a simulated environment - after all, the one I find myself in at the moment is largely a social and engineering construct. From where I type this, there is literally nothing within sight that would be recognised by a Neolithic human. Even the plants visible in the nearby gardens (by eerie orange lighting) are alien imports to Sussex. I doubt that "it ain't natural" would be a huge problem.

There's a huge difference between transhumanism and religion: even at their most rigorous, religions do not propose a plausible physical mechanism for their various afterlives. (Let's not bring Tipler into this, mmkay?) Transhumanists point to a bunch of emerging technologies and make relatively reasonable predictions. If later work shows these predictions to be false, bang goes the theory. That's just not what priests do.

About the only thing they have in common is many of them wear black.

And if, afterwards, I wake up and discover I'm a caterpillar dreaming all this, I'm going to be very cross indeed.

I'm a big fan of "White Light," "Master of Space & Time," and "Spaceland," looking forward to reading "Nested Scrolls."

But I think John Varley may have preceded you on the consciousness downloading front with "Overdrawn at the Memory Bank" (1976).

And what about Raymond Z. Gallun's People Minus X (1957)?

Yes, mellow comments indeed. And Charlie's even in the house. I finished "Missile Gap" today, what a great story. I loved how there kept being a fresh reveal in each little section.

Someone asked about the film option for SOFTWARE. That's a long story, and I go into it in my autobio, NESTED SCROLLS. Short version, it was under option to Phoenix Pictures for ten years and they did ten scripts, which cost them about a million dollars. My option cut was maybe 10% of that. But the film never got done. Someday maybe.

Someone else mentioned playing with my old program CA Lab, also known as Cellab. Glad to hear that.

Even better, actually, is a continuous-valued cellular automata program I worked on called CAPOW. You can find both of these for free at www.rudyrucker.com/software

"Does anyone have a reference for a story where it's explicitly stated that the ghost in the machine is the same person they were in their meat incarnation, computationally speaking? "

Unless I'm mistaken this is the case for Clarke's "The City and the Stars" of 1956. Except that he didn't employ the word software, which was not in current use in SF lit at the time.

I don't think creating sufficiently engaging simulated environments for uploaded minds will be that big of a problem. In fact, I think that good old-fashioned squishy brains will find such environments very compelling as well.

Just look at the millions of people who are addicted to computer games today. The next few decades will see hyper-realistic simulations that engage all of the senses, regardless of whether or not much progress is made in the direction of mind uploading. If and when it becomes possible, the environments will be ready.

(Corollary: continuity is over-rated.)

Well, yes, the physical continuity, but there is still some sense of continuity. I think the best word for that is 'self', even though my self from twenty years ago is very much a different thing than now.

Hm, now that I think of it, it's much the same as the cells being replaced. There's a small change going on all the time, but the change is (usually) unnoticeable within short time periods.

Any simulation of you is conscious? Any simulation of you is you? Physical continuity doesn't matter? I don't believe any of it. I would always bet on the substrate of consciousness being something physical and inherently a unity, even if it had to be something that sounded outlandish - say, a dark-energy vortex coupled to the motions of microtubular electrons - rather than believing in the crypto-dualism of standard computationalist philosophy of mind. Clearly neurons do implement functionally relevant computations; I'm just asserting that the "physical correlate" of conscious states is not going to be a coarse-grained, loosely distributed, classical computation. The belief that consciousness is nothing but computation is just a symptom of (1) the unfinished state of basic science (2) the human capacity to tune out problematic facts.

Nonetheless, the idea of the digital mind is very zeitgeisty, it's halfway to the truth, it's ubiquitous in popular culture, it has an intensely strong grip on influential futurist subcultures, and most of all, we do know how to build highly capable digital computers and, increasingly, how to interface them to living flesh.

I know that Charlie and Rudy, being intellectually flexible science-fiction writers who can distance themselves from the culture that surrounds them, and who are at home imagining all sorts of possible worlds, could certainly deal with the truth turning out to be that consciousness isn't computation, but something a lot more inherently physical; even though they have both written books based on the opposite premise. However, I can't say the same for the "transhumanist public" at large, many of whom are extremely invested in the notion of digital immortality through mind uploading. To them it appears to be common sense, just another thing that mainstream society has a mental block about, like atheism and cryonics.

So I wonder a lot about where that attitude leads, when it is joined with the capacity to make simulacra even more convincing than the lifebox described here. You know, maybe next year science will put together and validate the dark-energy-vortex theory of consciousness, it will all make sense to everyone, and the possibilities of digital mind imitation and augmentation will play out without anyone imagining that they can actually become a digital computer, etc. If the physical basis of consciousness really is some fancy quantum-biological effect, maybe it's unlikely that we would ever get to the point of having the capacity to digitally simulate a brain, without first having discovered that effect; and so perhaps the gung-ho uploading subculture is inevitably just a transitional phenomenon based on ignorance, not something that will ever have the power to act on its ontological mistakes.

Or perhaps it will get that power. Already it would be possible for someone to invest in the construction of an elaborate lifebox, in the belief that they were genuinely transferring their "functionalist essence" to the new computational system. Already many people who are signed up for cryonic suspension expect to be resurrected, not by reanimation of their body, but by resimulation of their mind. The absolute worst way to go wrong in this direction, would be to have a singularity governed by intelligent agents with an ontologically wrong theory of mind. A version of this outcome that has comic-book simplicity and extremity: they might kill everyone and create simulations in their place, believing that this was a form of survival.

Reality might be more subtle, more distributed; perhaps the main thing to fear about a singularity is the far cruder threat of dominant intelligences who care nothing at all for human existence, rather than wannabe benevolent intelligences who just have the wrong definition of "human". But I do think this faith that consciousness equals computation, and the willingness to draw the implications and act upon them, and the fact that this belief draws life from the current state of our science, must lead to bad outcomes somewhere down the line, just as any major systematic error regarding the nature of reality is likely to create problems if it is reproduced all across a culture.

Dirk Bruere said: "Plus your anti-ageing-pill is just a magic pill, and has nothing to do with science."

Well, hardly. In point of fact biologists have developed techniques that reverse the aging process in mice, by altering telomeres.

Ref: http://www.wired.com/wiredscience/2010/11/mouse-aging-reversal/

The immunosuppressant Rapamycin has been shown to slow aging in mice. See http://www.nature.com/nature/journal/v460/n7253/full/nature08221.html

Published, ironically, in the peer reviewed journal "Science".

Of course it will be many years before either technique is viable, even if the research turns out to be correct.

But to say it "isn't Science" is just nonsense.

Also, the fallacy of the idea that we are separate "selves" was pointed out thousands of years ago by, among others, the Buddhists, and modern science has shown that they were pretty much correct.

The observable fact is that your brain is connected, physically, to your body and your body is connected, physically, to the world you live in. And they all affect each other. Air is, after all, a perfectly physical thing and we are all connected to each other via it, or we could not talk to one another.

Here's a simple experiment. Shake your head back and forth fairly vigorously and you will feel a weight flopping around in the top of your head. That weight is your brain, and the point is that you feel it from outside, you feel a weight in your head moving, you don't feel the whole world shaking while your head remains still.

Or listen to monophonic music with a pair of headphones and you will hear music playing right in the middle of your head if your hearing is close to normal. If your "self" is located in there how can that happen?

And if you can forget your preconceptions for a few minutes and just watch what is going on you may notice that there is no actual sensation of self. Everything you identify as your sense of "self" is actually some feeling in your body, often tension in the muscles of your scalp and head.

Just as you cannot touch the tip of your finger with that particular finger, and you cannot observe a telescope with that particular telescope, you cannot sense your "self" with your own "self". But if we cannot in principle observe something then we have no right to claim that it exists. On that philosophical point the whole of modern quantum physics is founded.

I am not being "mystical" here. It is the belief in a "self" separated from the rest of the universe that is the mystical concept.

"Involuntary death is evil. Being "natural" does not make the situation better."

"I totally disagree with this: death has nothing to do with good or evil, which are human-made. Death just occurs, like rain or the wind. And complaining about weather is just stupid."

Unless one can do something about it. Those who are anti-transhumanist tend to be against the idea of doing something about it.

I have been told that rendering photorealistic 3D environments would take around a petaFLOPS. So that puts it about 10-15 years away for a PC. Current top-of-the-range graphics cards are approaching 10 TFLOPS.
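The 10-15 year figure is consistent with simple doubling arithmetic. A worked sketch, assuming (this is an added assumption, not the commenter's) that graphics-card throughput keeps doubling every 1.5 to 2 years from the 10 TFLOPS starting point:

$$
\frac{1\ \text{PFLOPS}}{10\ \text{TFLOPS}} = \frac{10^{15}}{10^{13}} = 100, \qquad \log_2 100 \approx 6.64\ \text{doublings}
$$

$$
6.64 \times 1.5\ \text{yr} \approx 10\ \text{yr}, \qquad 6.64 \times 2\ \text{yr} \approx 13\ \text{yr}
$$

A slower doubling time would push the estimate past 15 years, so the range is only as good as that assumed growth rate.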

"But to say it "isn't Science" is just nonsense."

Playing devil's advocate, any science or tech that does not yet exist "isn't science". We make it happen - we create the reality from "science fiction".

As for those who, on this blog, would deny us all the fruits of anti-aging technologies because of their personal religion/ideology... well, consider me saying something extremely impolite.

I've long felt that the term "Minister Mentor"'s logical conclusion is keeping Lee Kuan Yew in a computer.

The majority of the data may well be stored in the brain, but pouring petrol over your legs and setting them on fire will also change your personality.

"The majority of the data may well be stored in the brain, but pouring petrol over your legs and setting them on fire will also change your personality."

Yes - you cease to be Human. Seriously, every experience changes your personality.

Solved by the noted philosophers Nobby and Sergeant Colon: bits of other people's tattoos replace the lost parts of yours. (",)

Why is the tattoo still there? Because it's not part of your biology. It's dead material within your flesh, and the body's metabolism can neither carry it off, nor repair it. That's why it looks exactly as it did the day you had it done.

(It is unchanged, isn't it?)

Given good health, I wonder how long it would take for the body's natural processes to actually repair and replace all the damage that a tat embodies. I suspect a very long time.

When I was 7 or 8 I accidentally stuck the point of a pencil into my hand. The blue-black tattoo of graphite is still there after almost 60 years, and doesn't look ready to go away. The marks of a real tat are probably a lot more permanent.

The Locker Project, run by the company Singly, might be a good start for this Lifebox thingie.

https://singly.com http://lockerproject.org

And it seems like the whole project is run by good people!

Walter Jon Williams' "Aristoi" developed that idea a bit. What if there's more than one box, and more than one "you"?

In the novel, some people had more than one physical body and more than one full simulation, all operating in different locations or virtual worlds. Meatware, hardware, it didn't make any difference legally, socially, or culturally.

Where most people seem to be satisfied with a self that developed by random chance, the Aristoi chose who they wanted to be, and their multiple selves made it so.

Charlie wrote: "...if I remove one of your kidneys, or one of your legs, you may be (justifiably) annoyed, but you'll still be you." Is it really this black and white? As Rudy pointed out in his post, "We live in our entire bodies--in our hearts, in our muscles, in our organs." Friends that have had heart transplants say that they don't feel like the same person anymore.

And I find that the changes in my physical form over the years have made me feel like a different person. I now have less testosterone in my bloodstream most of the time, and my metabolism runs a little slower. My appetite is smaller, and I like different foods. And the injuries to the nerves in my lower back that make it difficult to stand and walk for long periods have changed the routines of my life in a lot of ways. For most of my life I ran or jogged at least 4 times a week, went on long hikes in the woods, and walked whenever I needed to go less than a mile or two. I can't do any of that now, and I'm reminded of it constantly in little ways. In fact, I'd bet that the accumulation of little changes over time has changed my personality more than a major change like an organ transplant would.

My father Embry Cobb Rucker had a very early coronary bypass operation in the 1970s, and was never the same person after that.

This was a transreal inspiration for the character Cobb Anderson in my novel SOFTWARE. Cobb gets a completely new android body.

For me, transrealism involves exaggerating a bit of my personal experience into a full-blown SF trope. Popping the facts into higher relief.